NVIDIA and GE HealthCare’s strategic push to augment radiology with physical AI

[Image courtesy of NVIDIA]

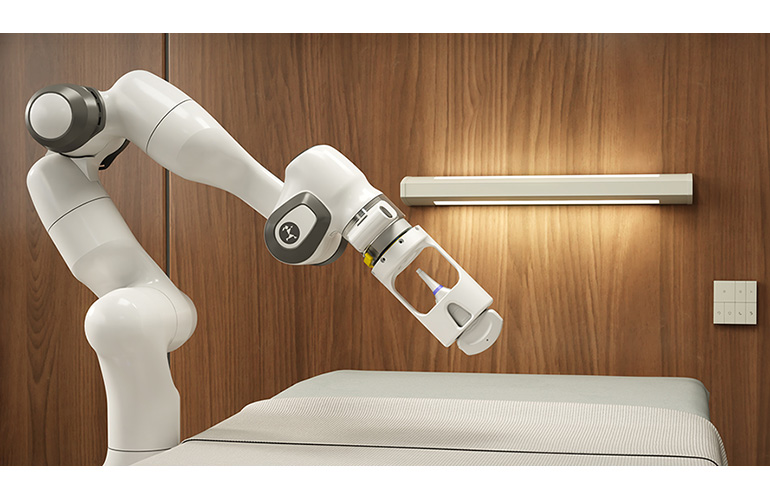

Imagine your doctor has referred you to a clinic to get your liver checked out. After walking down the hall of the hospital’s radiology wing, you enter a room with a robotic ultrasound system. A hospital employee greets you and has you lie on a table. She then tells the machine: “Please go ultrasound the liver.” The machine smoothly positions itself, acquires the images, and relays the scan to a radiologist.

This is the kind of future NVIDIA and GE HealthCare are striving to create as they join forces to develop next-generation autonomous medical imaging technologies. The news dovetails with NVIDIA’s growing interest in “physical AI” and its nearly two-decade partnership with GE HealthCare.

A high-tech solution to a growing radiology staffing shortfall

The ultimate point of such a scenario, though, is not novelty but practicality. Radiologists are in short supply worldwide. In the U.S., 37,482 radiologists were enrolled to provide care to Medicare patients in 2023, and expanding imaging use will likely exacerbate the shortage in the coming decades. According to companion studies by the Neiman Health Policy Institute published in the Journal of the American College of Radiology, baseline imaging utilization is estimated to rise by 16.9% to 26.9% by 2055. Meanwhile, radiologist supply is projected to increase by 25.7% during the same period, a projection that assumes the number of radiology residency positions stays flat after 2024. Because supply is projected to grow at roughly the same pace as demand rather than faster, the gap does not close. Long story short, the current radiologist shortage will likely persist for the next three decades without intervention.

The aforementioned ultrasound example was not incidental. There is a shortage of sonographers, too.


GE HealthCare and NVIDIA thus see a blend of robotics and AI as a means to supercharge radiology workflows. Kimberly Powell, vice president of healthcare at NVIDIA, frames the problem this way: “Part of why radiology is inaccessible is the workflow is complicated, and so how can you use AI to automate some of that workflow?”

Powell points to two ways AI can transform radiology workflows. First, through intelligent triage: “We do a ton of chest X-rays as a screening process, and call it maybe 10% of those actually have an anomaly and 90% don’t,” she explained. “Could you have an AI do that kind of first-order triage, and just put the important [scans] on top, and then get to the other ones when you have time?”

Second, through automated quality control: “AI can also quality check the image,” Powell continued. “There are many X-rays that are taken that are blurry, and the patient goes home and has to come back unnecessarily. With AI-enabled quality checking, the system can identify poor quality images immediately, allowing technicians to retake them before the patient leaves. This creates a closed-loop quality check that improves efficiency for both patients and hospitals, eliminating return visits for something that could be fixed during the initial appointment.”

Reimagining healthcare access through semi-autonomous radiology workflows

Ultrasound is routinely a life-saving diagnostic tool, yet it typically requires highly trained sonographers to operate. In many parts of the world, those experts simply aren’t available. Powell emphasizes the potential of autonomous ultrasound:

“If we want to increase the access to healthcare, ultrasound is a super life-saving device—whether scanning for thyroid cancer, looking at babies in utero, all the way through to diagnostics…. What if that ultrasound could be autonomous?”

According to a press release, NVIDIA and GE HealthCare’s collaboration addresses exactly this challenge. GE HealthCare has already pioneered AI-guided ultrasound technology that provides “Park Assist”-like guidance to operators. Now the two companies aim to take this further by developing fully autonomous X-ray and ultrasound systems that can “understand and operate in the physical world,” automating complex workflows such as patient placement, image scanning, and quality checking.

Making imaging devices autonomous—capable of operating in remote or minimally staffed locations—could dramatically reduce these barriers, enabling earlier diagnoses and more consistent follow-up care worldwide. Powell even envisions a future where patients could get thyroid scans at their local pharmacy.

The hospital as a robot

Taking the concept even further, Powell describes “physical AI” as giving medical devices—and potentially entire hospitals—the ability to perceive, reason, and act: “We’re at the point where NVIDIA has been working really hard… creating the next wave of AI we call physical AI… It’s AI that understands the physical world, and it’s AI that can be embodied in something like a robot,” she said.

She describes the hospital itself as being a sort of robot. “You could imagine speakers and sensors—maybe it’s camera technology, maybe it’s LIDAR technology—giving you a lot of physical understanding of what’s happening in the hospital.”

NVIDIA’s Isaac for Healthcare platform is now in early access, with adopters including Moon Surgical, Neptune Medical, and Xcath. Industry partners like Ansys, Franka, ImFusion, Kinova, and Kuka are integrating their simulation tools, sensors, and robotic systems to create a comprehensive ecosystem.

Powell explains that making medical devices truly autonomous requires a three-computer platform that NVIDIA has been developing for years. “We’ve created a three-computer platform for physical AI… The first is a real-time AI computer inside the medical device… The second is the computers in the data center training up these AIs… And this third computer, we call Omniverse, is essentially the operating system for virtual worlds.”
